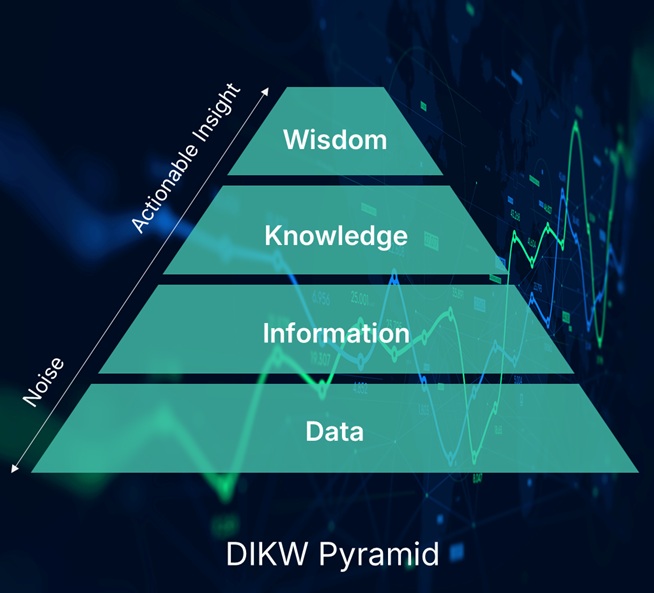

Data & Analytics - From noise to actionable insights

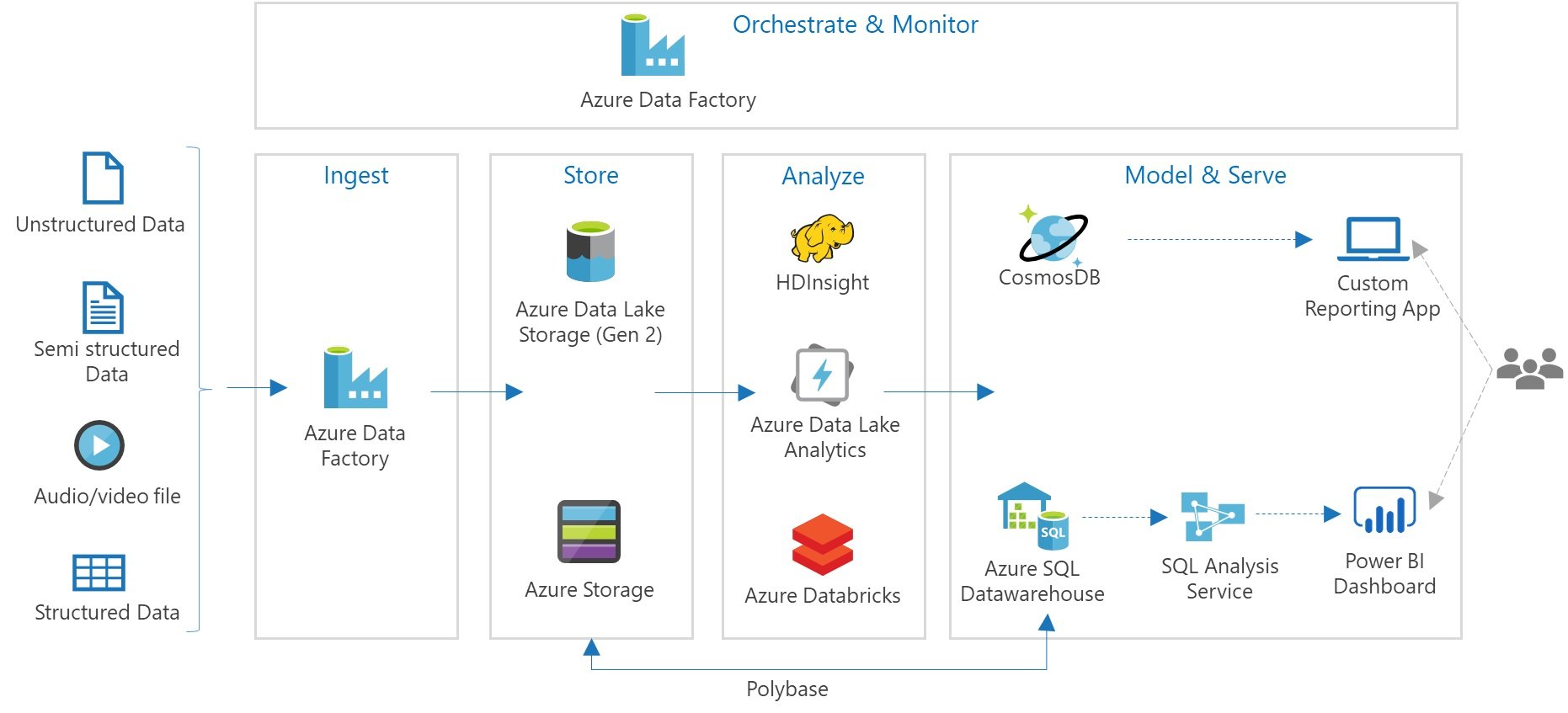

Take the data journey - Move from Data to Information to Knowledge and finally to Wisdom. Data is a powerful asset and should be treated as a product. Successful organizations are becoming "data-driven organizations" as they start their AI journey. Build a strong enterprise data strategy. Use modern data architectures such as data mesh, data fabric, and data lakehouse. Secure your data and govern your data. Think about data quality and lineage.